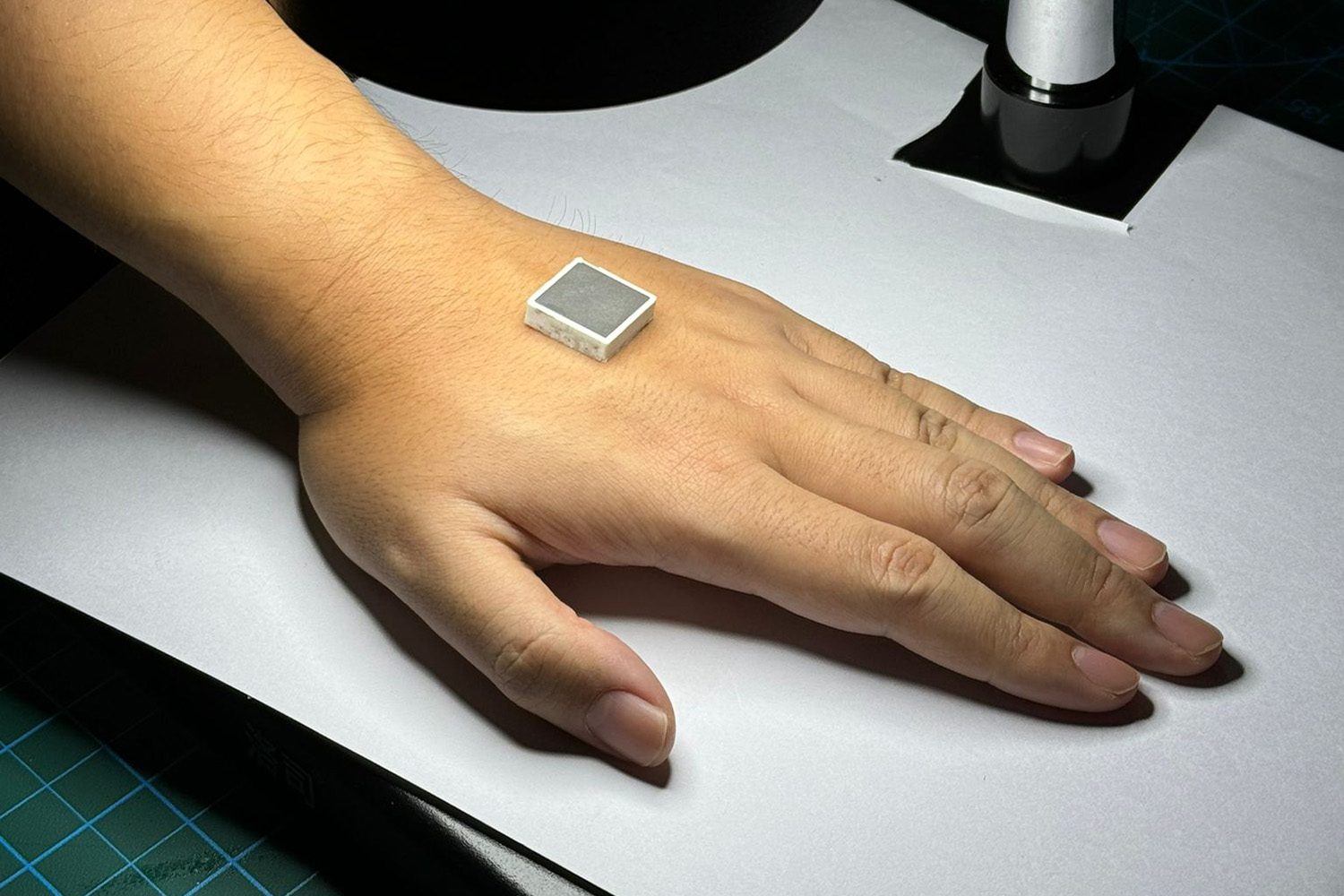

![We have been building and working on a local AI with memory and persistence [P]](https://preview.redd.it/o4cu1of74jjg1.jpeg?width=640&crop=smart&auto=webp&s=d0bff8fc7b80acfaa0e5850d852bdf6e44ed2afb) We have been building and working on a local AI with memory and persistence [P]

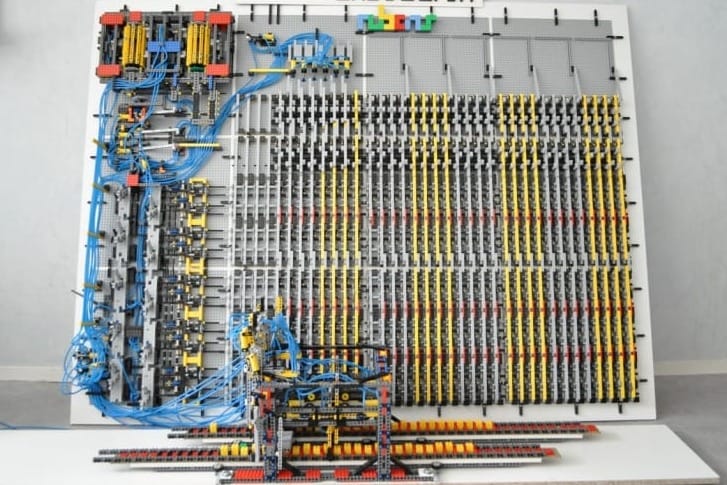

1 hour ago @ reddit.com

![[P] I trained YOLOX from scratch to avoid Ultralytics' AGPL (aircraft detection on iOS)](https://external-preview.redd.it/VgxN_BHzj3QWKLjM_HicsmE5yLu-TPCy60DlF6DG4rc.jpeg?width=640&crop=smart&auto=webp&s=72bc4eed477ee4ac90ca31d43e2f609419964b72)

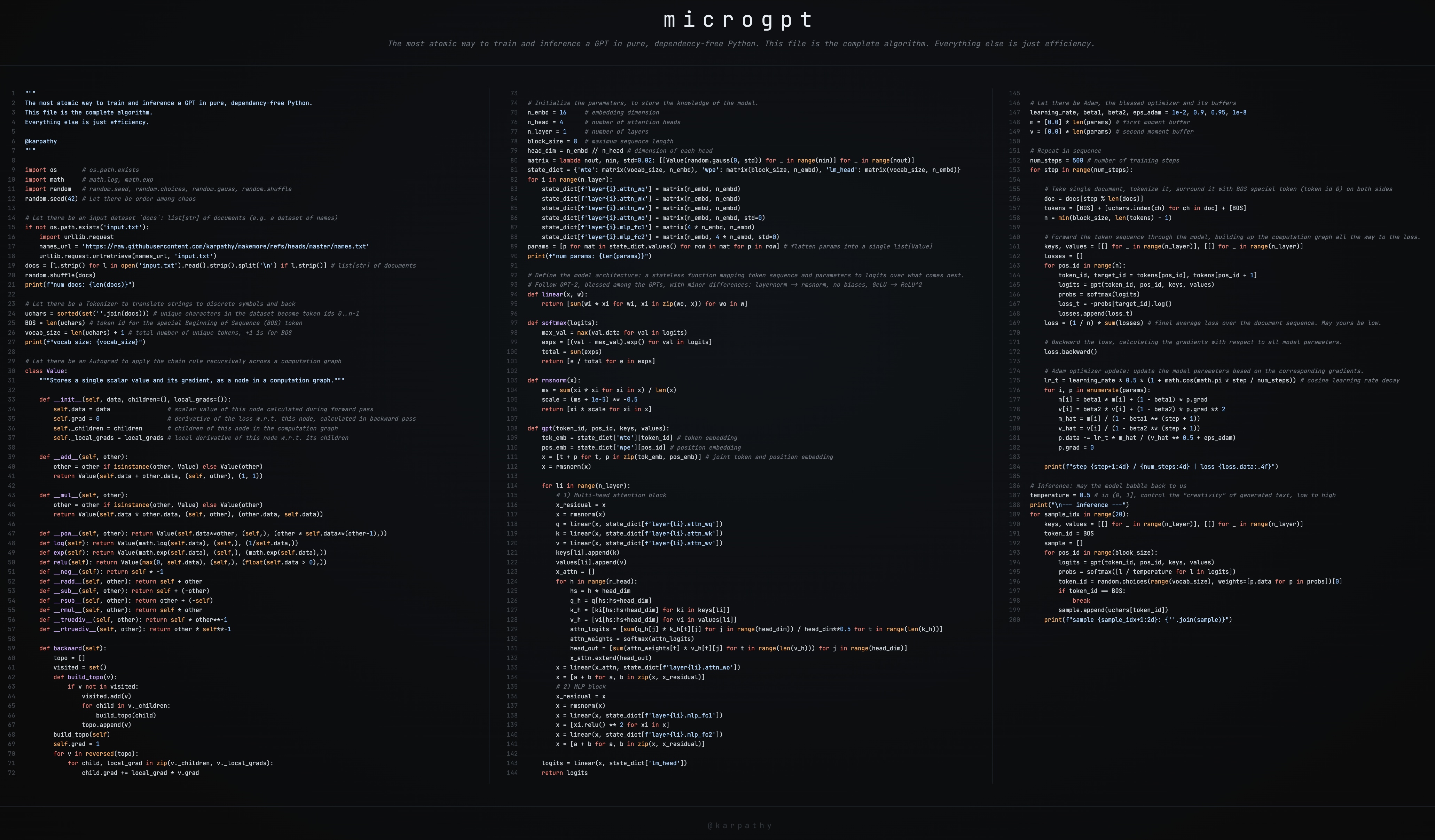

![[P] I built 16 single-file, zero-dependency Python implementations of every major AI algorithm — from tokenizers to quantization. No PyTorch. No TensorFlow. Just the math.](https://preview.redd.it/u0xorcdeufjg1.png?width=640&crop=smart&auto=webp&s=22fc00a0bacb36598fb03be62ce5ff7cf58ddbca)

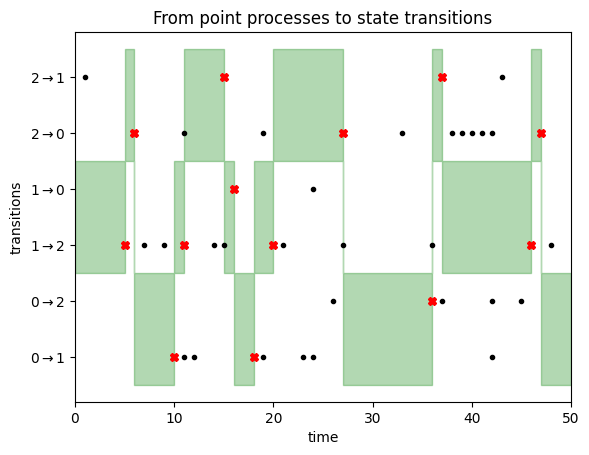

![[D] Asymmetric consensus thresholds for multi-annotator NER — valid approach or methodological smell?](https://preview.redd.it/aukkp6r9kfjg1.png?width=640&crop=smart&auto=webp&s=27b0d9dc5765dce73efcf5fc86defc958bdc04b9)

![[D] Interesting Gradient Norm Goes Down-Up-Down](https://preview.redd.it/hg2fed5u2ejg1.png?width=140&height=121&auto=webp&s=c61e1c948658db8d5b030e0293726d132eddbee5)

![[Paper Analysis] The Free Transformer (and some Variational Autoencoder stuff)](https://i.ytimg.com/vi/Nao16-6l6dQ/maxresdefault.jpg?sqp=-oaymwEmCIAKENAF8quKqQMa8AEB-AH-CYAC0AWKAgwIABABGHIgPShAMA8=&rs=AOn4CLCCEXG65dygUjCUjktnX7tvu0mMGQ)

![[Video Response] What Cloudflare's code mode misses about MCP and tool calling](https://i.ytimg.com/vi/0bpYCxv2qhw/maxresdefault.jpg?sqp=-oaymwEmCIAKENAF8quKqQMa8AEB-AH-CYAC0AWKAgwIABABGGUgZShlMA8=&rs=AOn4CLBR8X7VGdO9ZvdO-QBw3jtTUdf9jw)

![[Paper Analysis] On the Theoretical Limitations of Embedding-Based Retrieval (Warning: Rant)](https://i.ytimg.com/vi/zKohTkN0Fyk/maxresdefault.jpg?sqp=-oaymwEmCIAKENAF8quKqQMa8AEB-AH-CYAC0AWKAgwIABABGGYgZihmMA8=&rs=AOn4CLCrV5gwZvDK4dpEK4MdD2mchKcYRQ)

![Your Favorite AI Startup is Probably Bullshit (Ep. 298) [RB]](https://datascienceathome.com/wp-content/uploads/2023/12/dsh-cover-2-scaled.jpg#1783)