3 hours ago @ reddit.com infomate

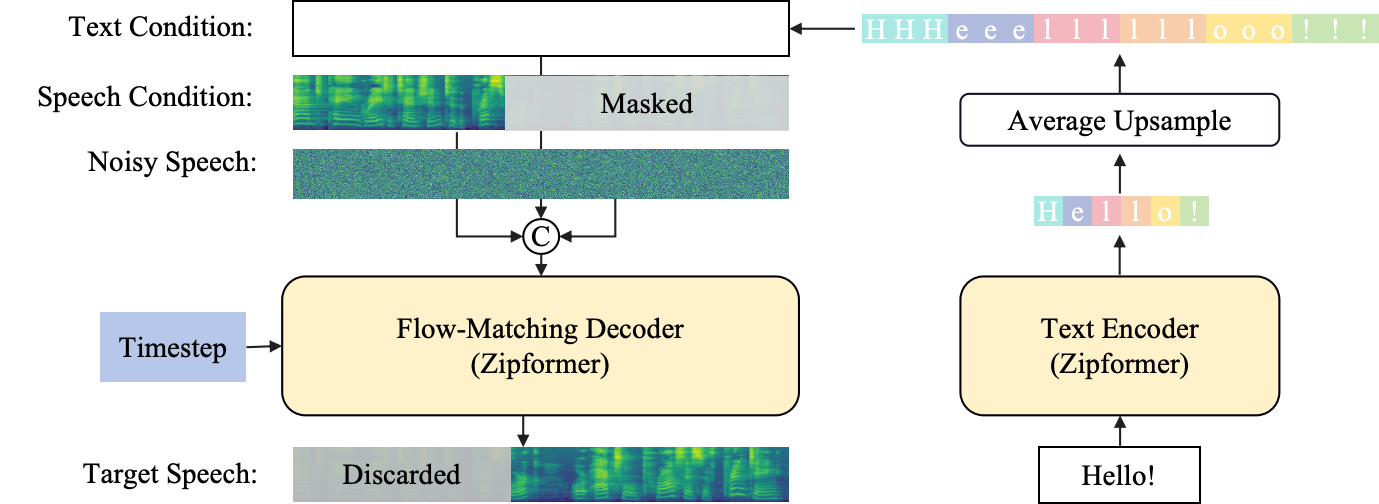

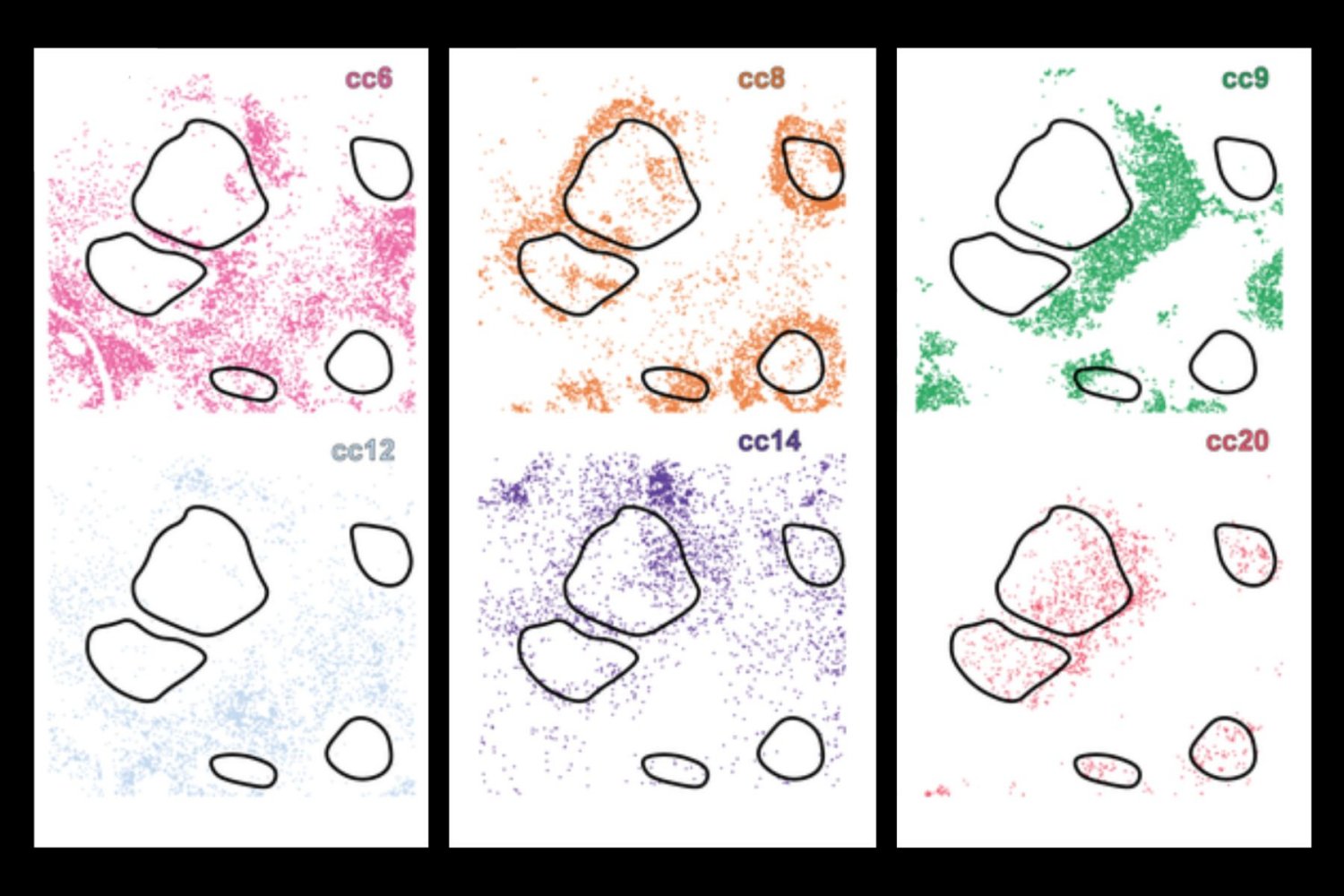

![[P] Hill Space: Neural networks that actually do perfect arithmetic (10⁻¹⁶ precision)](https://preview.redd.it/d2rqnobr8gcf1.png?width=640&crop=smart&auto=webp&s=7120bdc27a055845f853d6da787a97524965a31f)

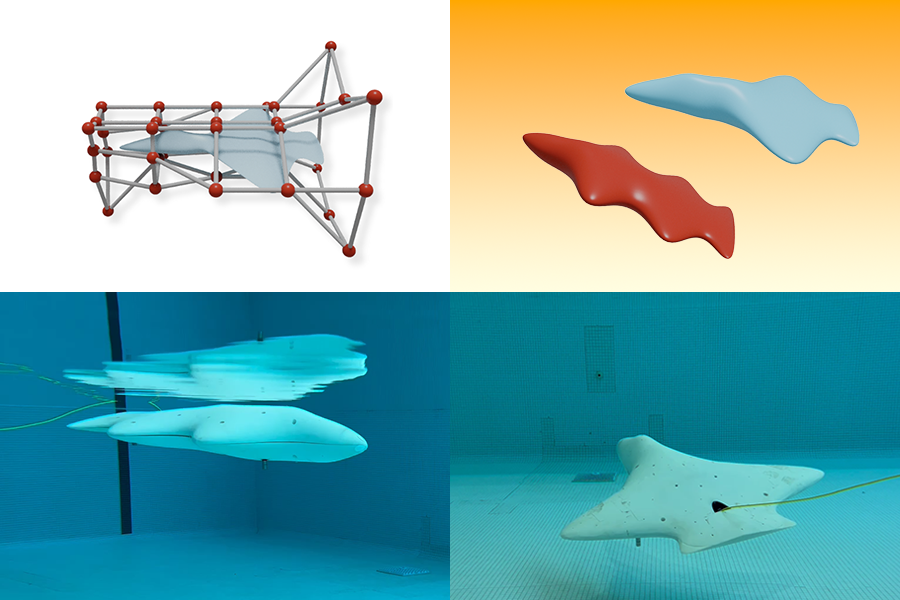

![[R] Join the Ultimate Challenge: Robopalooza – Space Robotics Competition For $5000!](https://external-preview.redd.it/lf8cx1CTeWTCx7evbexIvLeRrCnIgSex4ohMW16UWkU.jpeg?width=320&crop=smart&auto=webp&s=055c95b766b990b4f9425475140ee7faff3d1c7b)

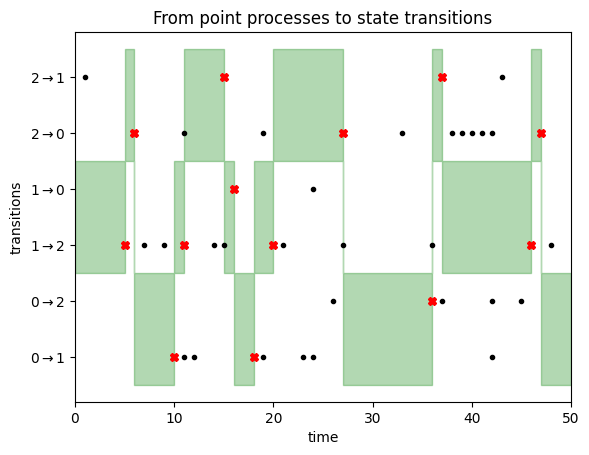

![[D] Modelling continuous non-Gaussian distributions?](https://b.thumbs.redditmedia.com/I_uSwWLgDEFBTAFoymmFo76LoxDaHgy1anfid7neaxs.jpg)

![Bayesian Deep Learning is Needed in the Age of Large-Scale AI [Paper Reflection]](https://i0.wp.com/neptune.ai/wp-content/uploads/2024/01/blog_feature_image_045902_8_6_3_1-1.jpg?fit=1200%2C630&ssl=1)

![Position: Understanding LLMs Requires More Than Statistical Generalization [Paper Reflection]](https://i0.wp.com/neptune.ai/wp-content/uploads/2024/01/blog_feature_image_045530_5_1_6_4-1.jpg?fit=1200%2C630&ssl=1)

![Tech’s Dumbest Mistake: Why Firing Programmers for AI Will Destroy Everything (Ep. 286) [RB]](https://datascienceathome.com/wp-content/uploads/2023/12/dsh-cover-2-scaled.jpg#1783)